NVIDIA DGX Systems

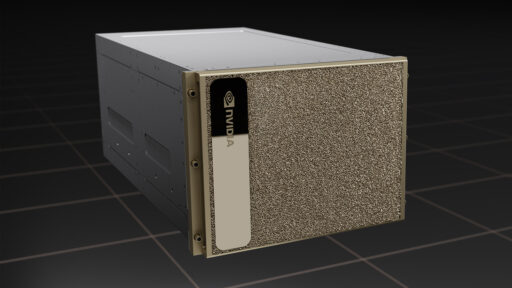

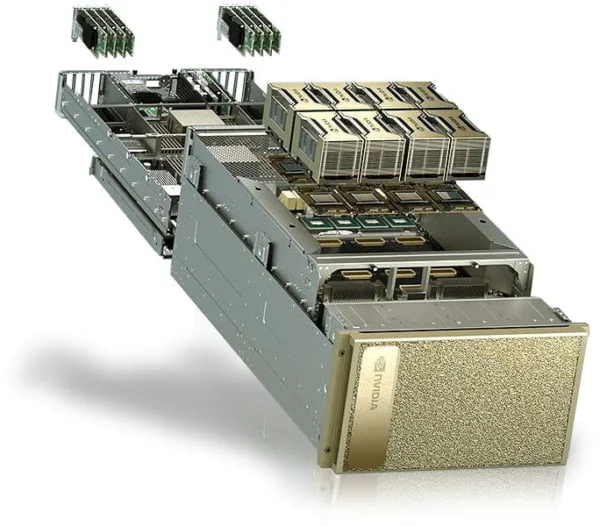

NVIDIA DGX H100 is a fully integrated hardware and software solution on which to build your AI Center of Excellence.

Built from the ground up, the NVIDIA DGX platform combines the best of NVIDIA software, infrastructure, and expertise in a modern, unified AI development solution that spans cloud and on-premises data centers.

NVIDIA DGX stands out in the AI and HPC landscape for several reasons, each of which has contributed to its reputation as a pioneering solution.

Proven reference architectures for AI infrastructure delivered with leading storage providers

NVIDIA DGX SuperPOD brings HPC and AI to data center scale. It supports hybrid deployments and provides leadership-class accelerated infrastructure and performance for the most challenging AI workloads, with industry-proven results.