NVIDIA GPU-SDK

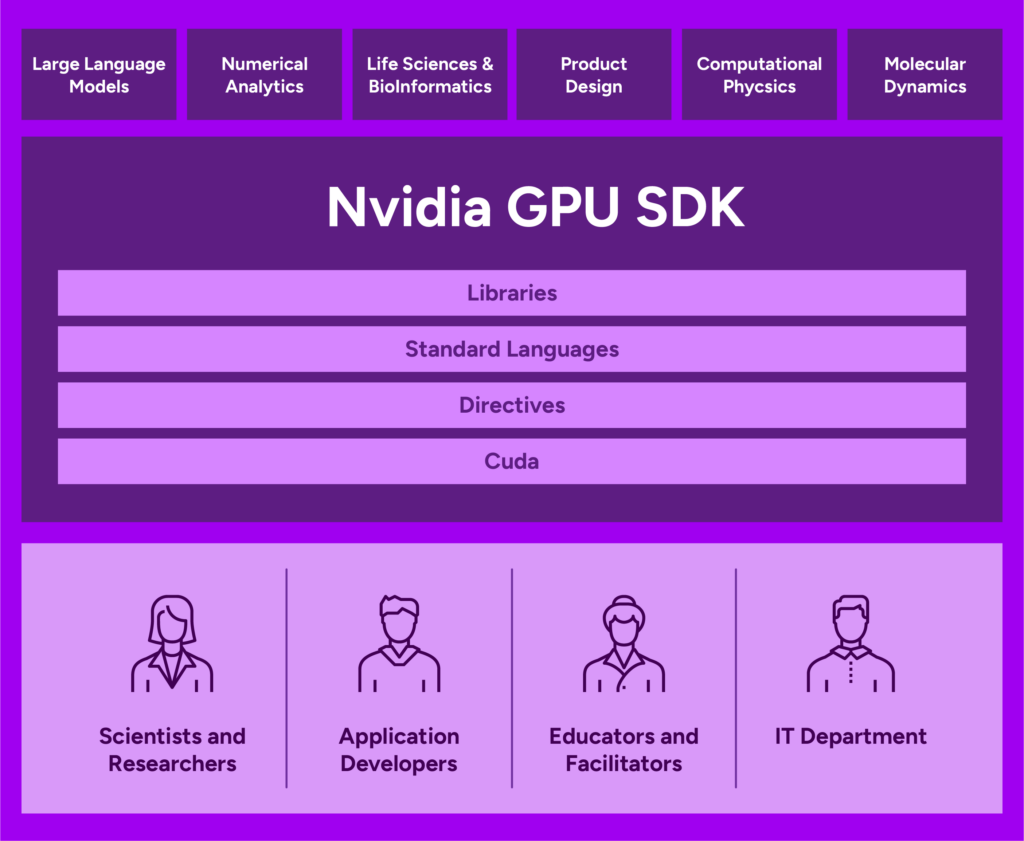

Optimized tools and frameworks are central to getting strong results in software development and AI implementation. They give developers and data scientists an efficient environment for exploiting the full potential of the underlying hardware, such as GPUs. The result is high-performing applications that process large datasets quickly and accurately.

In AI, optimized frameworks streamline the training and deployment of models, making it easier to manage datasets, shorten training times, and improve the accuracy of outputs.

With optimized tools and frameworks, your teams can build more efficient, powerful, and reliable software, which means faster data processing, more accurate AI models, and more responsive applications.

Equip your developers and data scientists with a suite of tools and frameworks designed to optimize code for GPU utilization and build enterprise AI applications.

Let’s discuss how we can help you